The independent knowledge worker’s guide to generative AI

Become a prompt wizard and a bot whisperer to stay ahead of the curve

By now, we all expect massive productivity boosts across all industries due to automated workflows and new insights generated from number-crunching machine learning algorithms. But for many of us who aren’t the software engineers working with these technologies, the impact, so far, has felt intangible. Apart from the occasional question for Siri, our lives have not yet become a Jetsons-esque wonderland of man-machine convergence.

That is, until about last year, when publicly available generative AI applications began to pick up steam. The big one was ChatGPT, an AI chatbot powered by large language models (LLMs) developed by OpenAI and released to the public in November of 2022. Within months of its release, it had gained over 100 million users, making it the fastest-growing consumer software application in history.

ChatGPT isn’t the only chatbot built on powerful LLMs. For example, Google released Bard, and Gather partner IBM released watsonx, its enterprise-focused alternative. And generative AI tools do more than just process text. OpenAI also manages DALL-E, a tool that generates digital images from natural language descriptions. Midjourney and Stable Diffusion are similar tools for conjuring imagery. OpenAI’s Codex parses natural language and generates code in response. Other platforms allow users to create video, music, and vocal clips from simple prompts.

According to a new report from McKinsey, the generative AI boom will be a boon for some, with estimated benefits reaching as high as $4.4 trillion a year. But these gains will necessarily come with disruptions. McKinsey’s researchers believe that knowledge workers will be primarily impacted.

Historically, major tech disruptions impact the working class first. The printing press, weaving machines, the automobile — these innovations put people who worked with their hands out of work. In contrast, our current disruption is going to hit knowledge workers (as defined by management consultant Peter Drucker) hardest. Those who trade in intangibles — numbers, words, images, ideas — are most vulnerable to this shift.

If you’re reading this newsletter, you’re probably a knowledge worker, so you, more than anyone, need to understand the implications of this technology. The whole McKinsey report is data-driven, substantive, and worth your time.

The research looked at 63 use cases for generative AI across 850 occupations. About 75% of the economic value will come from customer operations, marketing and sales, software engineering, and research and development.

McKinsey isn’t the only organization sounding alarm bells. In March, Goldman Sachs estimated that AI tools such as DALL-E and ChatGPT could automate 300 million jobs.

So what’s an independent knowledge worker to do? How can we stay ahead of the curve, and help our clients do so as well?

Under the hood

First, you’ll need to know how this stuff works. You don’t have to be a data scientist to grasp the fundamentals, and being able to explain these at a high level will make you look like a wizard to folks who can’t.

You’re not going to find a better introduction than Stephen Wolfram’s. It’s long, and gets a little technical in places, but it’s comprehensive and it’ll give you the confidence to explain how ChatGPT really works. The renowned computer scientist reverse-engineers the technology and explains what it’s doing in layman’s terms, boiling it down thusly:

…the actual neural net in ChatGPT is made up of very simple elements — though billions of them. And the basic operation of the neural net is also very simple, consisting essentially of passing input derived from the text it’s generated so far “once through its elements” (without any loops, etc.) for every new word (or part of a word) that it generates.
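To make that “one new word at a time” loop concrete, here is a minimal sketch in Python. It is a toy, not how ChatGPT is actually built: the `NEXT_WORD_PROBS` table and the `generate` function are hypothetical names invented for illustration, and the toy looks only at the last word, whereas a real LLM computes its probabilities with a neural net over all the text so far.

```python
import random

# Hypothetical toy "model": for each word, the probability of each next word.
# A real LLM derives these probabilities from a neural net, not a lookup table.
NEXT_WORD_PROBS = {
    "i": {"sold": 0.6, "opened": 0.4},
    "sold": {"knives": 0.7, "donuts": 0.3},
    "opened": {"the": 1.0},
    "the": {"knife": 0.5, "package": 0.5},
}


def generate(prompt, max_new_words=5, seed=0):
    """Extend the prompt one word at a time by sampling from the toy table."""
    rng = random.Random(seed)  # fixed seed so repeated runs give the same text
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(max_new_words):
        options = NEXT_WORD_PROBS.get(words[-1])
        if not options:  # no known continuation, so stop early
            break
        choices = list(options)
        weights = [options[word] for word in choices]
        # Pick the next word at random, weighted by its probability.
        words.append(rng.choices(choices, weights=weights, k=1)[0])
    return " ".join(words)


print(generate("I"))
```

Changing the seed changes which continuation gets picked, which is roughly why the same prompt can give you different answers on different tries.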

ChatGPT starts from a prompt, and then predicts “what comes next” based on probabilities. It is really that simple, and yet, seemingly magical. I’ve had moments with the technology that have left me stunned at its capabilities. Fellow Gatherer Nick Douglas, for example, prompted ChatGPT with a short story he’d written:

In the summer between high school and college, I sold kitchen knives from a catalog. I couldn’t convince people to buy much, but I did convince myself these were the best knives at any price. I did get my mom to order a jackknife for my dad’s birthday. It came in the mail; the package was in the car as my mom drove me to the airport for a church mission trip. In the passenger seat I opened up the knife to demonstrate the safety mechanism, and in the attempt I sliced open my palm. Next summer I sold donuts from behind a counter.

He asked it to rewrite the story in the form of a sonnet and got this back:

Upon the cusp of youth and wisdom’s gate,

I peddled blades of steel, their worth I’d preach.

My tongue, though silver, failed to captivate,

Yet of their excellence I did believe.

A token for my father’s birth bestowed,

My mother, trusting, took the bait and bought.

The fateful day, to distant lands we rode,

My eager hands the instrument had sought.

To prove its worth and demonstrate its guard,

I grasped it firm, but oh! the irony,

My palm did spill its crimson ink, a shard

Of pain and unintended injury.

Thus, humbled, next I dealt in sweeter trade,

Where donuts’ siren call my future made.

I’m no poetry critic, but it’s sensical, it maintains a rhyme scheme, it contains all the facts of the story, and it even throws in some clever wordplay and imagery. Nick went through a few iterations with ChatGPT, making slight tweaks in his prompts, then added some of his own poetic flair to create a poem he saw fit to publish.

Gather gut check

We asked the Gather Network a few questions about generative AI, and our survey yielded a few interesting data points. It turns out that only about a third of us are using generative AI for paid work, likely due to the unpolished nature of these tools, and their propensity to yield incorrect answers. Most respondents said they believed their business will succeed in the era of generative AI, with more than half indicating that they expect to be able to wield AI “like a jedi.”

But on the other hand, 80% of respondents rated their current knowledge of generative AI as only “medium.” The vast majority of respondents felt they could stand to learn more, with roughly the same share expressing a desire to take advantage of educational opportunities that would help them learn to use generative AI tools for their business.

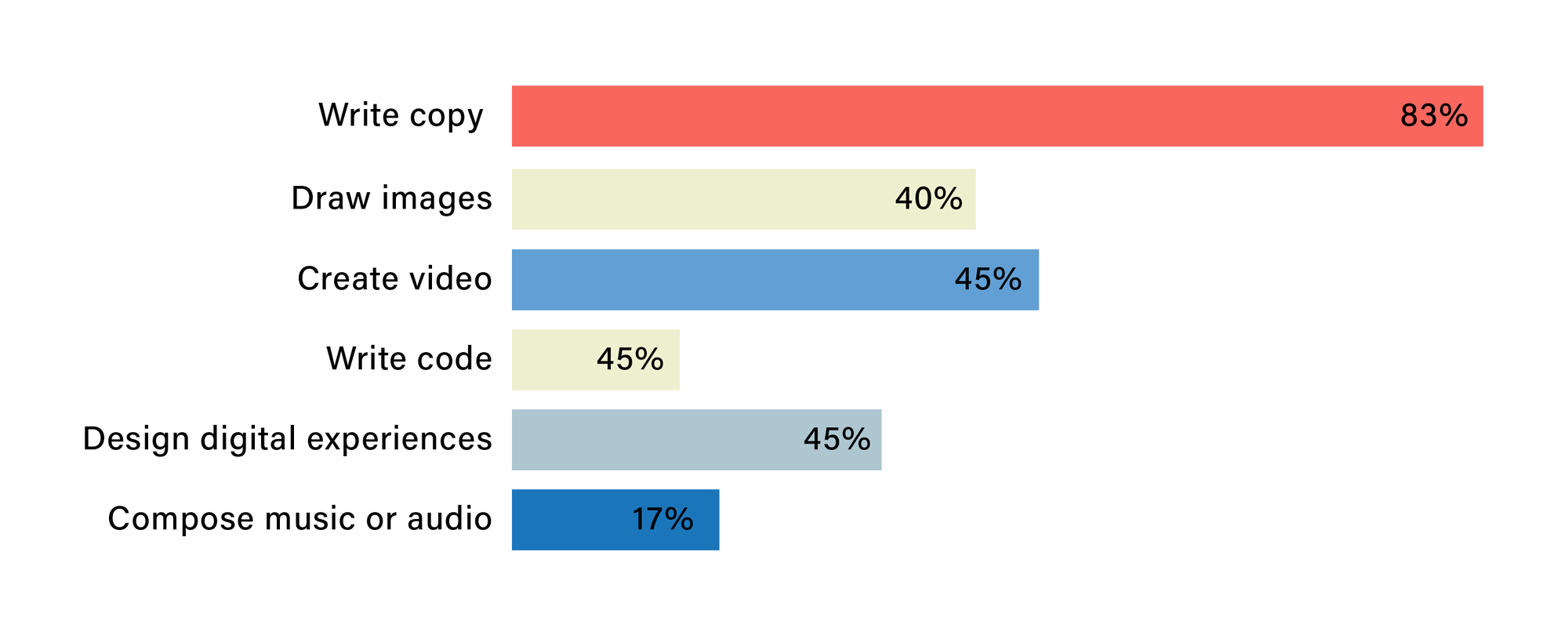

Gather folks are using generative AI (or want to use it) in different ways:

We gave respondents the chance to enter their own answers, too. The custom responses included:

• Research, strategy, modeling

• Brainstorm for client presentations

• Generate database queries

• Talk to customers

• Broad, introductory research

• Create outlines and starters for deliverables

• Write lesson plans

• Create contracts

Every single person I’ve spoken with about ChatGPT told me that they see it as a springboard or starting point, a way to avoid tedious early work, rather than a wholesale solution. That might change as these apps get smarter, but for now, people are still necessary for creative work.

One anonymous survey respondent reflected these concerns, summarizing the feelings of many within the Gather Network:

“I do think there’s a lot of unwarranted hype around generative AI, particularly for copy generation. I’ve caught it making things up and regurgitating false information multiple times, and the level of copy it generates is generic at best. If organizations rely on it too heavily, I fear a future in which the internet is awash in garbage content and Google is nearly unusable. That said, I do think there’s room for AI. It may require fact-checking, but it is an overall decent research assistant. It is also the single best writer’s block destroyer I’ve found so far, which may be its biggest value to me personally. If I don’t know how to begin or what to do next, I ask ChatGPT to write it for me. In editing what it gives me, I usually figure out what I actually want to say. That alone is worth the price of admission for me.”

Our survey told us that there was some unease around the topic, but mostly a lot of optimism and excitement. A lot of people are using (or want to use) these tools, but they want to do it right, and do right by their clients. So let’s explore how to do that.

Surviving and thriving in a more automated world

In an interview with Bloomberg, LivePerson founder and CEO Rob LoCascio (the guy who invented browser-based chat widgets) talked about how he is training his vast staff of customer service representatives to not only work alongside generative AI-powered chatbots, but to actually take part in designing them.

“Four or five years ago, everyone thought bot builders should be tech people or data scientists,” said LoCascio. But he thinks the world needs millions of so-called “conversational designers” to help create bots that are better able to handle the nuances of human conversation, problem solving, and parsing the emotional content of customer complaints and queries. LoCascio describes the average call center agent as a world-class expert in the art of solving problems quickly and effectively, and he argues that their emotional intelligence is the skill-set needed to build chatbots. Being able to code is secondary.

OK, so you don’t have to be a developer to wield or even design generative AI tools. So how are you going to make yourself useful as these tools continue “eating the world”?

Here are four tips:

1. Understand the limitations

A lot of people compare ChatGPT’s effect on writing to the calculator’s effect on math. This analogy doesn’t really work, because a calculator gets you the exact answer you are looking for. ChatGPT and Midjourney, in my experience, tend to give answers that are, at best, halfway there. When it comes to summarizing a topic or putting together an image, they enable me to bypass a lot of the legwork, but the output must be rigorously re-prompted, shaped, massaged, fact-checked, and double fact-checked. It’s a good practice to assume that initial outputs are nowhere near ready for primetime.

Just like the humans who make the data upon which the algorithms feed, generative AI platforms are fallible. They can only, in a sense, regurgitate information that’s already out there. They don’t comprehend the underlying reality that language describes; they can only plot out text that is statistically plausible within the context of a prompt. This can result in a phenomenon called a hallucination: an incorrect output asserted so confidently that you would naturally take it as fact. Hallucinations can be especially dangerous in the context of client work. The last thing you’d want is a client finding a completely bogus assertion in a piece of writing they paid for, especially when it’s an assertion that a 10-year-old could’ve spotted. But such hallucinations happen routinely, because the bots aren’t thinking like humans. They’re only looking for statistically probable ways to put one word in front of the other. For example, lawyers trying to quickly create legal documentation have found that the software will reference completely fabricated legal precedent that “sounds” real, but isn’t. Here’s a good podcast discussion on how and why hallucinations happen across different generative AI platforms.

Another limitation is the bias problem. Bias refers to a systematic skew in an AI model’s predictions, which can arise from several factors. Some models are poorly designed, and aren’t fit for the task at hand. And even well-designed models are only as good as the data used to train them. The “garbage in, garbage out” principle applies here. Because tools like ChatGPT are trained on text scraped from the internet, they pick up crappy, low-quality data along with the good stuff. Generative AI tools frequently replicate human biases in this way. See how bias can impact image generation in this article from Bloomberg.

Any content generated by AI must be meticulously fact-checked by a human before being published. Sometimes those fact-checkers are editors, sometimes they are engineers, lawyers, or other subject matter experts. Even specialized AI ethicists are required for some use cases. Understanding the limitations of the technology can help you avoid steering a client into dangerous territory.

2. Get permission and proceed with caution

The fear around generative AI is real, for all the reasons described above, and then some. Apple has restricted employees from using ChatGPT on work devices. JPMorgan Chase, Wells Fargo, Bank of America, Goldman Sachs, and Deutsche Bank have banned it entirely. Samsung employees were caught feeding sensitive source code into ChatGPT to optimize it, disregarding the possibility that this data would be stored and used to further train ChatGPT. According to the terms of OpenAI’s data usage policies, the information could be shared with the company’s “other consumer services.” Even the entire country of Italy temporarily banned it.

Consultants need to be aware of the risk of uploading even seemingly non-sensitive information within the context of their contractual relationship with a business. A good rule of thumb: if you wouldn’t share the information with your client’s direct competitor, don’t share it with ChatGPT — and even then, err on the side of caution. Self-imposed bans and limitations on ChatGPT are becoming increasingly common among companies.

Another of our survey respondents wrote:

I’d like to have guidance on how to handle generative AI with clients. Like, how to bring it up, to gauge how comfortable they are with us using it on work for them. I don’t think they are comfortable with it right now, so I don’t use it, but I would like to at least be able to open the conversation with them at some point without ruffling feathers.

As a general rule, be open and honest with clients about using generative AI, and consult with their legal department if your usual contacts aren’t sure whether the company has an official policy regarding its use.

3. Become a prompt wizard

Once you’ve gotten approval and feel comfortable using generative AI for work, it’s time to upskill. You need to become a prompt wizard.

A wizard uses specialized knowledge, instantiated in rituals and spells, to achieve specific goals. Working with generative AI puts you in the position of spellcaster. In generative AI parlance, such spells are called “prompts,” and they can be as simple as “Draw me a picture of Abraham Lincoln playing Xbox,” or an elaborate string of parameters designed to yield extremely specific results:

This result is a good example of how generative AI gives you a starting point rather than a finished product! There’s a bit of Lincoln, a hint of Xbox, but it nailed the pastoral environment.

Anyway, you can find generators that will turn simple prompts into elaborate ones at Hugging Face, an open-source AI community. I used Hugging Face’s Midjourney Prompt Generator to produce the above prompt. You can find many other generative AI tools there as well.

One of our anonymous Gather survey respondents made the following suggestion:

I’ve recently been exploring the OpenAI Discord server where they’ve created this channel called “AI Prompts Library.” This has blown my mind. There are people building whole operating systems using the GPT website and running them right on the OpenAI site. The types of things I’m beginning to see around Natural Language Coding are WILD. And the prompts just keep getting better and better.

You know how you can supercharge Google using special search operators? For example, searching “site:xyz.com pizza” looks for the word “pizza” on a specific domain rather than the entire web. You can use the word “AND” to search for two terms at once. You can use “filetype:” to search for PDFs or PowerPoint decks. There are dozens of these operators.
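If you find yourself typing the same operators again and again, a few lines of Python can assemble the query for you. This is only a sketch: `build_search_query` is a made-up helper name, and it covers just the site and filetype operators mentioned above.

```python
def build_search_query(terms, site=None, filetype=None):
    """Join search terms with optional site: and filetype: operators."""
    parts = []
    if site:
        parts.append(f"site:{site}")  # restrict results to one domain
    if filetype:
        parts.append(f"filetype:{filetype}")  # restrict results to one file type
    parts.extend(terms)
    return " ".join(parts)


print(build_search_query(["pizza"], site="xyz.com"))
```

The printed string is the same “site:xyz.com pizza” query from the example, ready to paste into the search box.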

Well, these generative AI tools blow this extended functionality out of the water. If you know the right prompts, you can manipulate the results like a wizard. Here are some examples for use with ChatGPT:

• Get your answers as a table, and then manipulate that table using prompts. This allows you to whip up a useful spreadsheet filled with data in seconds.

• Ask for an answer using the voice of your favorite author, or from the perspective of a generic CEO or an accountant.

• Limit output to a specific word count, or ask for an answer in the form of a top ten list. You can ask it to avoid using the Oxford comma.

• Ask for an essay for an audience of software engineers, or an audience of 10-year-olds.

• Ask for help generating more creative prompts for Midjourney or other tools.

• Copy-paste a half-finished piece of writing and ask ChatGPT to finish it. If you don’t like the results, try to give it direction like an editor would: Shorten the intro. Expand the conclusion. Remove the reference to X. Insert two paragraphs that demonstrate opposing viewpoints on Y issue.

These are just a few prompt ideas for ChatGPT alone. You can experiment and find prompts that are nice to have on hand. Fortunately, ChatGPT saves your chat history, so you can go back to prompts that have worked for you in the past.
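One way to keep your favorite spells on hand is to treat a prompt as a template with a few knobs. The sketch below is an illustration only: `build_prompt` and its parameter names are hypothetical, and the exact wording of each instruction is an assumption you should tune to what works for you. The output is just text that you paste into ChatGPT or any other chatbot.

```python
def build_prompt(task, voice=None, audience=None, output_format=None, max_words=None):
    """Assemble a single prompt string from a task plus optional constraints."""
    parts = [task.strip()]
    if voice:
        parts.append(f"Write in the voice of {voice}.")
    if audience:
        parts.append(f"The audience is {audience}.")
    if output_format:
        parts.append(f"Format the answer as {output_format}.")
    if max_words is not None:
        parts.append(f"Keep it under {max_words} words.")
    return " ".join(parts)


prompt = build_prompt(
    "Summarize the risks of using generative AI for client work.",
    audience="10-year-olds",
    output_format="a top ten list",
    max_words=200,
)
print(prompt)
```

Swapping one argument (say, `audience="software engineers"`) gives you a new variation without retyping the whole prompt, which makes it easier to compare results side by side.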

4. Become the bot whisperer

Once you’ve learned how to effectively use these platforms and have familiarized yourself with the fundamentals of how they work, you may be able to convince a client to pursue a project involving them. Aside from you directly using generative AI, think about how you can save your clients money by strategically integrating these tools into their workflows.

The Harvard Business Review reports that Heinz, Nestle, Stitch Fix, and Mattel are already using DALL-E and similar tools for image generation in experimental contexts. Can you be a change agent within client organizations to help them understand the risks and opportunities involved?

Deloitte conducted a six-week experiment in which 55 developers used OpenAI’s Codex generative coding tool. They judged the resulting code to be at least 65% accurate, and found an overall 20% improvement in code development speed. Deloitte’s not going to be laying off all its software engineers any time soon, but the experiment demonstrated the benefits of the technology, limited as they are.

Maybe you could help run similar experiments with your clients? Maybe you could help turn your client’s internal knowledge base into a chatbot? Maybe you could lead an isolated AI-generated content development project? For a glimpse into how Gather has used generative AI tools to supercharge client work, tune in to the Dispatch podcast episode with designers Hilary Davis and Chiara Bartlett, who explain how they leveraged the technology to create at massive scale with IBM.

Man vs. Machine: If you can’t beat ’em, join ’em

If you can convince your client to pursue any generative AI project at scale, it will need to be a cross-functional collaboration involving not just designers, but risk managers, legal and compliance officers, and buy-in from across the C-suite. Deloitte has published an analysis of the likely key stakeholders for such a project and a list of questions you can ask to help your client design and manage a generative AI project successfully. They recommend harmonizing any project with the company’s existing strategy, among other tips.

Knowledge workers who make content needn’t worry that AI is going to take their job. The generative AI era is just beginning, and with some self-education, you can ride the crest of that wave. These are tools that may replace some workers, just as the weaving machine eliminated some textile jobs. But there’s no reason why you can’t use these tools to your advantage, and show your clients how they can equip their people to do more by exploiting the tools rather than fearing them. We should expect this sector to evolve extremely quickly, continuing at the turbocharged pace set last year. With the right approach, you can help clients navigate around justified concerns, and move quickly to obtain business value that’s just sitting there, waiting to be captured.

Thanks for joining us for the Summer Dispatch. If you’d like to hear more about where generative AI is taking us, check out the Gather Dispatch podcast, hosted by Jason Oberholtzer and Mimi Sun Longo. They recently recorded an episode on this very subject.

Header image Prompt:

“wide shot of group of knowledge workers at a table working on a podcast for a client, one prompt wizard, one bot whisperer, one stylish man with a leather jacket, one stylish woman with green hair, one stylish man with a bald head and beard, one stylish man with orange hat, 8k, hd, octane render, realistic, 16:9”